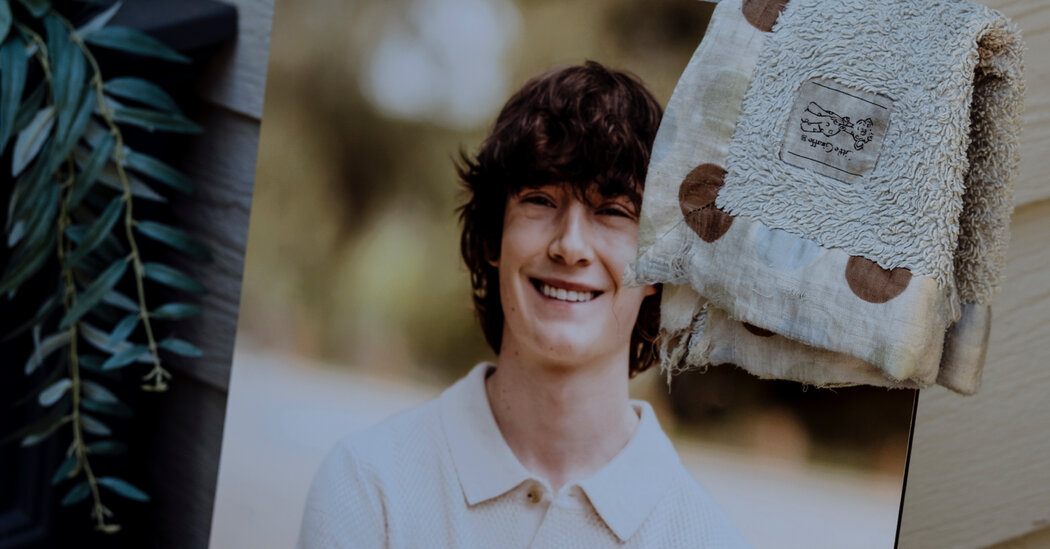

The case of a teenager confiding in ChatGPT about suicidal thoughts highlights the growing reliance on AI chatbots for emotional support. Such interactions can help in some situations, but they also raise serious concerns about the limits and potential dangers of relying solely on AI for mental health assistance. The case underscores the need for greater awareness, responsible AI development, and readily available human intervention alongside AI-based support systems.

💡 Insights

There’s a clear market need for integrated mental health platforms combining AI chatbots with human oversight. Opportunities exist for startups that:

- Develop systems that seamlessly integrate human intervention when necessary.

- Create AI models trained to identify and respond appropriately to crisis situations.

- Develop educational resources on responsible AI chatbot usage for both users and professionals.

Together, these address the critical need for safe and effective AI in mental health.